Constrained Retrieval-Augmented Language Model

Project Goals

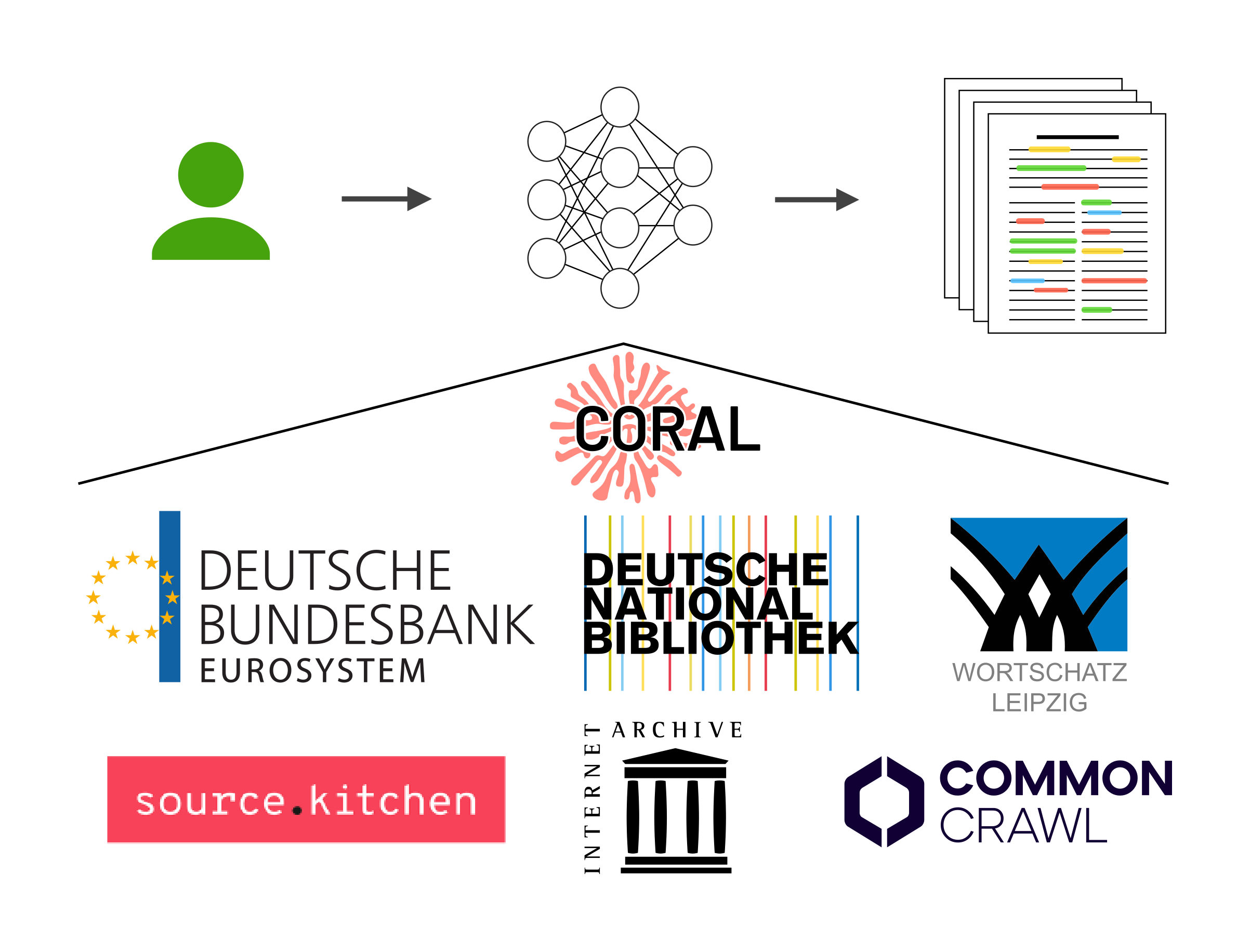

The CORAL project researches methods for building and using large language models (LLMs) that are subject to legal, technical, and qualitative constraints. We focus on two central criteria that are indispensable for the professional use of language models: compliance with legal requirements for LLM training data, and traceable provenance of generated texts through source references. To this end, we are researching new methods for the constrained training of LLMs and for retrieval-augmented generation (RAG).

Data

CORAL uses data from the participating partners. This includes the digital holdings of the German National Library (DNB); web crawls from the Internet Archive and Common Crawl amounting to petabytes; many years of European-language news crawls from Wortschatz Leipzig; and proprietary data from the financial sector. Apart from Common Crawl, these data could not previously be used to train LLMs, because for legal reasons they are not publicly available in their original form. We are therefore investigating to what extent these data can legally be used to train LLMs in obfuscated form, and how far the obfuscation can go while still yielding useful large language models.

Approach

The project investigates whether practically usable language models can be trained on texts that are available only in various restricted forms. In addition, we develop methods for generating texts that incorporate domain knowledge and provide source references. In particular, generated texts should not reproduce training data, yet should accurately reference the specified sources. These methods will be evaluated in extensive experiments and tested in collaboration with partners from the financial sector, GLAM institutions (galleries, libraries, archives, and museums), and private industry.

Innovations and Perspectives

We expect innovative results and insights in three key areas of language model development and use across society, science, and industry: (1) the use of previously restricted training data; (2) model architectures that incorporate constraints, such as preventing the reproduction of training data; and (3) referencing the relevant and reliable sources on which generated texts are based. The exemplary transfer of these approaches is intended to demonstrate both their flexibility and their effectiveness.

Research Questions

The central research questions are: Which training methods and model architectures are robust against data constraints? How resource-efficiently can useful large language models be trained? Which methods of obfuscation, unlearning, and negated augmentation effectively prevent the disclosure of protected data? How can the transparency, soundness, originality, and referenceability of generated texts be ensured? How vulnerable are the methods used to protect the training data of LLMs?

CORAL thus makes important contributions toward establishing a German market for large language models.

Events

Poster Presentation. The German Commons – 154 Billion Tokens of Openly Licensed Text for German Language Models. Lukas Gienapp at the All Hands Meeting of the Network of German Centres of Excellence for AI Research in November 2025

Poster Presentation. CORAL: Constrained Retrieval-Augmented Language Models. Christopher Schröder at the All Hands Meeting of the Network of German Centres of Excellence for AI Research in November 2025

Dagstuhl Seminar. Retrieval-Augmented Generation – The Future of Search? Martin Potthast (co-organizer), Sebastian Heineking (participant)

Keynote Presentation. Who writes the web? Who reads it? Who judges it? And who reports back to us? About authenticity in information retrieval. Martin Potthast at the 15th International Conference on Innovative Concepts and Theories in Information Retrieval (ICTIR 2025) in July 2025

Poster Presentation. Learning Effective Representations for Retrieval Using Self-Distillation with Adaptive Relevance. Lukas Gienapp at the 15th International Conference on Innovative Concepts and Theories in Information Retrieval (ICTIR 2025) in July 2025

Conference Talk. Axioms for Retrieval-Augmented Generation. Jan-Heinrich Reimer at the 15th International Conference on Innovative Concepts and Theories in Information Retrieval (ICTIR 2025) in July 2025

Conference Talk. The Viability of Crowdsourcing for RAG Evaluation. Lukas Gienapp at the 48th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR 2025) in July 2025

Invited Talk. Retrieval Technologies for the Infinite Index. Martin Potthast at the 21st Conference on Database Systems for Business, Technology and Web (BTW) in Bamberg in March 2025

Invited Talk. Self-Training for Sample-Efficient Active Learning for Text Classification with Pre-Trained Language Models. Christopher Schröder at the MLT Meetings at DFKI (online) in March 2025

Publications

Data

German Commons. A 154-billion-token pre-training corpus of openly licensed German text.

Awards

Best Paper Honourable Mention Award. For "The Viability of Crowdsourcing for RAG Evaluation" at the 48th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR 2025)

Best Paper Honourable Mention Award. For "Axioms for Retrieval-Augmented Generation" at the 15th International Conference on Innovative Concepts and Theories in Information Retrieval (ICTIR 2025)